We are increasingly hearing about “the Anthropocene”, both within and outside the scientific community. The Anthropocene is a term used by geologists to describe the geological epoch in which we now live. It means ‘the era of Man’, i.e. the period in which we recognise Man as a major geological force, one that significantly and globally alters the Earth.

Although the term is increasingly used, the Anthropocene is not yet officially recognised by the scientific organisations of geologists as a formal geological epoch. To be recognised as such, it must meet a number of criteria, including:

- Changes can be detected at the global scale in stratigraphic materials (rocks, glaciers, marine sediments)

- A datable starting point (which also marks the end of the previous one) can be identified, such as a sudden and significant change in the chemical composition of geological strata. For example, to date the transition from the Cretaceous to the Paleogene, geologists use the iridium peak dated at 66 million years BP, which records the impact of a meteorite on Earth; the reference section for this boundary is located at El Kef, Tunisia.

This starting point, in addition to being precise and global, must be accompanied by secondary markers in strata showing other widespread changes occurring throughout the Earth at the same time, such as changes in the chemical composition or in faunas (e.g. extinctions).

Geologists are therefore working on the definition of the Anthropocene, and thus on the definition of its starting point. A synthesis has recently been proposed to define this starting point (Lewis & Maslin 2015). To summarise, among the candidate starting points, two dates were identified as valid:

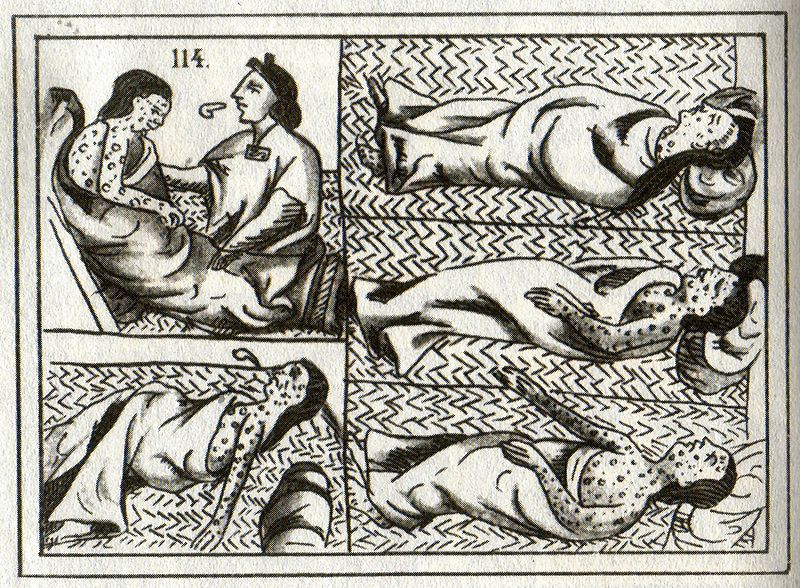

- 1610: This date corresponds to a minimum concentration of CO2 in the atmosphere. You may ask, why speak of CO2 in 1610? Haven't CO2 emissions only been rising since the 19th century? Well no, because humans started deforesting long ago to make room for agriculture, which resulted in a slow and gradual increase in atmospheric CO2 levels. However, there was an exceptional decrease from 1550 to 1610. In fact, 1610 marks the end of a sordid period: the discovery of America by Europeans. Between 1500 and 1600, Europeans gradually spread wars, epidemics, famine and slavery among the natives of America, which resulted in the disappearance of around 50 million people (an estimated 54-61 million people lived in the Americas in 1492, against about 6 million in 1650). This sinister figure is comparable in scale to the death toll of the Second World War. This genocide led to the disappearance of agriculture over much of the Americas, and over 100 years the forests gradually recovered, which temporarily reversed the CO2 trend. The level of atmospheric carbon decreased until it reached a minimum in 1610, before it began to rise again.

16th century Aztec drawing of smallpox victims


- 1964: This date corresponds to a maximum of carbon 14 in the atmosphere. Carbon 14 is the radioactive isotope of carbon, and its peak in 1964, as you might have guessed, is due to the development and repeated testing of nuclear weapons by various nations around the world (i.e., not only the two bombs dropped on Japan). This peak was accompanied by many other radioactive markers resulting from atomic bomb testing globally. It also coincides with the beginning of the “Great Acceleration”, when major human-induced changes increased at the global scale, including the exponential increase in CO2 emissions.

An underwater nuclear bomb test conducted in 1946


The authors of the paper point out that “The event or date chosen as the inception of the Anthropocene will affect the stories people construct about the ongoing development of human societies.” Whichever of the two dates is chosen, 1610 or 1964, the symbol is disturbing: war, terror and violence. Let this be an embarrassing reminder of what our societies are capable of, and let it guide our future societal choices.